What Changed and Why It Matters

AI agents left the lab and hit production. Enterprises now run agents in code, ops, and growth workflows every day. New reporting shows the risks are outpacing controls.

The immediate problem: secrets are leaking. Coding assistants and agents are exposing credentials, repos, and private data across tools and contexts. Meanwhile, “shadow AI” is spreading—agents created outside central IT with real access and no oversight.

Why it matters: the risk moved from models to agents. Agents act, integrate, and connect to systems. When they fail, they fail with your keys.

“82% of enterprises use AI agents daily, but weak governance and ownership gaps expose major security risks.”

“Taken together, these cases show that secrets leakage is not a single flaw, but a pattern of failures across multiple tools and contexts.”

The Actual Move

Here’s what the ecosystem is doing, and why it signals a shift to agent-native governance:

- Research flagging real leaks: Wiz found that 65% of leading AI companies leaked API keys or credentials on GitHub, exposing private data and model assets. This is a clear supply-chain and developer workflow risk, not a theoretical one.

- Field reports from coding assistants: Knostic documents multiple cases where AI coding tools surfaced secrets across chat, context windows, and code suggestions—revealing a repeatable failure pattern rather than one-off bugs.

- Enterprise wake-up calls: BankInfoSecurity stresses that agents amplify mistakes because they act fast and at scale—and calls for frameworks that monitor behavior and enforce controls.

- Governance products emerge: Credo AI positions an “Agent Registry” so teams aren’t “flying blind” as autonomy increases—signaling a move to inventory, ownership, and policy for non-human actors.

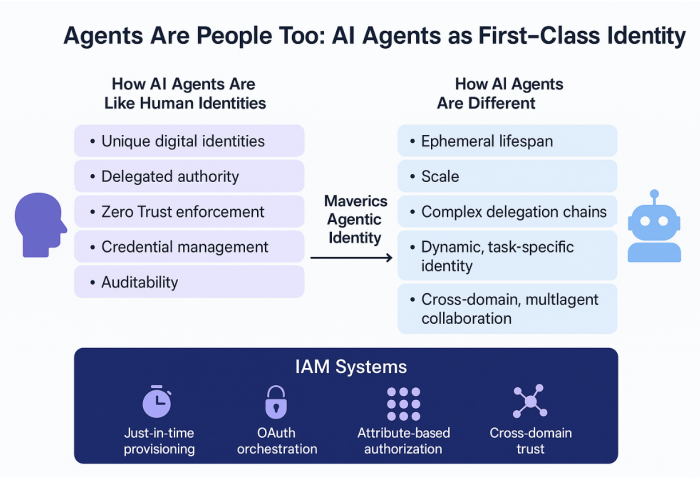

- Industry consensus builds: Security leaders are pressing on overprovisioned non-human identities, shadow AI, and the need to treat agents like first-class identities rather than features inside apps.

- Operator reality checks: Builders and operators highlight that the “last mile” to effective agent use is solving security, governance, and privacy—before scaling automation.

“AI agents can act quickly, which increases the impact of mistakes. Enterprises need a framework that monitors agent behavior…”

“The future of AI governance is agent-native—and the Credo AI Agent Registry ensures you’re not flying blind in the era of autonomy.”
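The leak pattern in these reports, where secrets surface in chat, context windows, and suggestions, is often reduced by scrubbing text before it reaches an assistant. Here is a minimal sketch of that idea. The function name `redact_secrets` and the two rules are assumptions made for illustration (an AWS-style access key ID shape and a generic quoted `key = "value"` assignment). They are nowhere near exhaustive; real scanners use far larger rule sets.

```python
import re

# Illustrative rules only (hypothetical, not from any vendor's product).
# Each rule: (name, pattern, replacement).
SECRET_RULES = [
    # AWS-style access key ID: "AKIA" followed by 16 uppercase letters or digits.
    ("aws_access_key_id", re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED]"),
    # Quoted assignments such as api_key = "..." or token: '...'.
    # Keeps the name, separator, and quotes; masks only the value.
    (
        "generic_assignment",
        re.compile(
            r"(?i)\b(api[_-]?key|secret|token|password)\b(\s*[:=]\s*)(['\"])([^'\"]{8,})\3"
        ),
        r"\1\2\3[REDACTED]\3",
    ),
]


def redact_secrets(text: str) -> tuple[str, list[str]]:
    """Return the text with matches masked, plus the names of rules that fired."""
    fired = []
    for name, pattern, replacement in SECRET_RULES:
        text, count = pattern.subn(replacement, text)
        if count:
            fired.append(name)
    return text, fired
```

Returning the list of fired rules alongside the cleaned text means the same call can feed a log or an alert, so a redaction is also a signal that a secret was sitting somewhere it should not have been.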

The Why Behind the Move

- Model: Agents are not just inference. They chain tools, call APIs, read repositories, and write code. That creates new data flows, and new exfiltration paths, that traditional app or model governance does not catch.

- Traction: Daily enterprise use of agents forces buyers to ask basic questions: Who owns this agent? What can it touch? How do we roll it back? Without answers, adoption stalls.

- Distribution: Vendors that package observability, permissioning, and audit win enterprise deals. Governance features are now part of the core product, not add-ons.

- Timing: Two forces converged—developer acceleration via coding assistants and the rise of LLM-native automation. Both increased secret sprawl and over-scoped tokens.

- Competitive dynamics: The next winners won’t be the most “autonomous,” but the most governable. Procurement now screens for an agent registry, role-based access control (RBAC) for non-human identities, audit logs, and egress controls.

- Strategic risks: Over-blocking kills utility. Under-governing creates breach multipliers. The trap is thinking policy documents solve runtime behavior. They don’t.

Here’s the part most people miss: agent risk is identity-and-secrets risk wearing a new interface. Treat agents as identities, not features.
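To make "agents as identities" concrete, here is a minimal sketch of a registry with deny-by-default permission checks. Everything in it is hypothetical: `AgentIdentity`, `AgentRegistry`, and scope strings like `repo:read` are illustrative names, not any vendor's API. A production version would sit on top of an existing identity provider rather than an in-memory dict.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    """A registry record: every agent gets an owner, a purpose, and scopes."""
    agent_id: str
    owner: str              # accountable human or team
    purpose: str            # why the agent exists
    scopes: frozenset[str]  # explicit permission budget, e.g. "repo:read"


class AgentRegistry:
    def __init__(self) -> None:
        self._agents: dict[str, AgentIdentity] = {}
        # Every check is recorded as (agent_id, scope, allowed).
        self.audit_log: list[tuple[str, str, bool]] = []

    def register(self, agent: AgentIdentity) -> None:
        if not agent.owner:
            raise ValueError("every agent needs an accountable owner")
        self._agents[agent.agent_id] = agent

    def is_allowed(self, agent_id: str, scope: str) -> bool:
        """Deny by default: unknown agents and unlisted scopes are refused."""
        agent = self._agents.get(agent_id)
        allowed = agent is not None and scope in agent.scopes
        self.audit_log.append((agent_id, scope, allowed))
        return allowed


registry = AgentRegistry()
registry.register(
    AgentIdentity(
        agent_id="release-bot",
        owner="platform-team",
        purpose="open release pull requests",
        scopes=frozenset({"repo:read", "pr:write"}),
    )
)
registry.is_allowed("release-bot", "pr:write")      # True
registry.is_allowed("release-bot", "secrets:read")  # False: not in its budget
registry.is_allowed("shadow-agent", "repo:read")    # False: never registered
```

The unregistered `shadow-agent` is refused and still lands in the audit log, which is the point: shadow AI becomes visible the moment it asks for something.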

What Builders Should Notice

- If an agent can act, it must have an owner, a purpose, and a budget of permissions.

- Secrets hygiene is now product strategy. Rotate, scope, and log by default.

- Inventory beats investigation. You can’t govern agents you can’t see.

- “Agent-native” equals registry, non-human RBAC, audit trails, and egress guardrails.

- Ship guardrails as UX. Make least privilege, dry runs, and approvals the easy path.
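The last two points can be sketched together: an egress allowlist plus dry-run and approval as the default path. This is a sketch under stated assumptions, not a reference implementation. `ALLOWED_HOSTS`, `egress_allowed`, and `run_action` are hypothetical names, the hosts are placeholders, and the function only returns a decision; it performs no network call.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; in practice this would come from per-agent policy.
ALLOWED_HOSTS = {"api.github.com", "internal.example.com"}


def egress_allowed(url: str, allowed_hosts: set[str] = ALLOWED_HOSTS) -> bool:
    """Permit outbound calls only over HTTPS to exact hosts on the allowlist."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in allowed_hosts


def run_action(url: str, *, dry_run: bool = True, approved: bool = False) -> str:
    """Return the decision for a proposed outbound action (no network I/O)."""
    if not egress_allowed(url):
        return "blocked"
    if dry_run:
        return "dry-run"         # the default: describe, don't do
    if not approved:
        return "needs-approval"  # real execution requires an explicit approval
    return "execute"
```

Because `dry_run` defaults to `True` and `approved` to `False`, a caller has to opt in twice before anything executes. The exact-host match also means a lookalike such as `api.github.com.evil.io` is blocked rather than slipping through a substring check.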

Buildloop Reflection

Clarity compounds. Treat every agent as a first-class identity—or treat every incident as a lesson you could’ve learned cheaper.

Sources

- Knostic — AI Coding Assistants are Leaking Secrets: The Hidden Risk …

- LinkedIn — Managing AI agents: the governance gap

- The Hacker News — Governing AI Agents: From Enterprise Risk to Strategic Asset

- YouTube — The Security Gap When AI Agents Have Access – Governance …

- Reddit — How are you addressing security, governance, and privacy …

- BankInfoSecurity — AI Governance Risks Rise as Enterprises Scale Agents

- SecureWorld — Two-Thirds of Leading AI Companies Leaking Secrets on …

- Blott — How Code Secrets Leak Through AI Models

- The AI Innovator — Shadow AI: The Next Governance Gap

- Credo AI — The Era of AI Agents Has Arrived—and Your Governance Strategy Needs to Catch Up