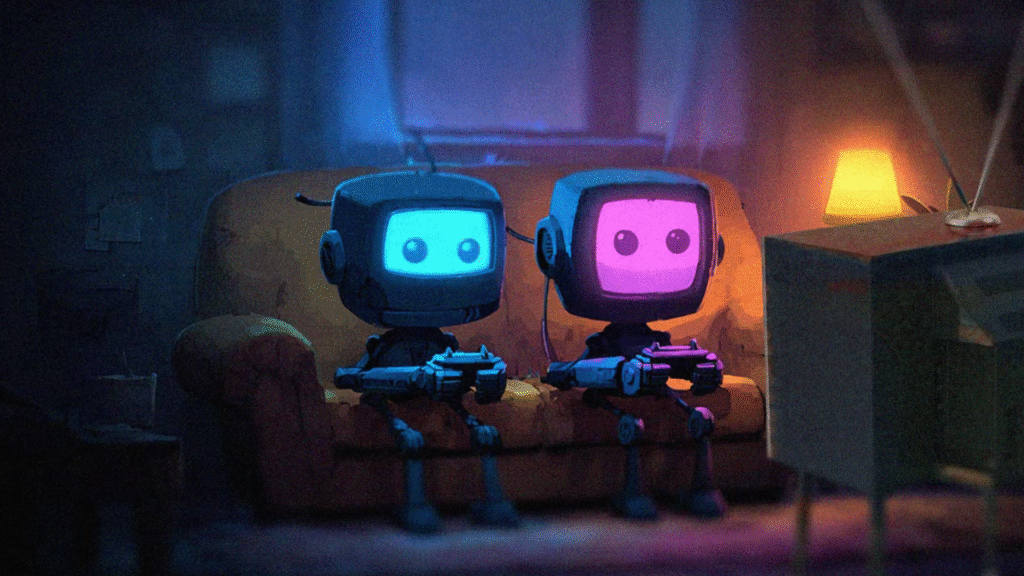

OpenAI didn’t buy a game studio to make games. They bought a laboratory — for agents, synthetic data, and distribution.

That’s the move.

The shift: from chat to live worlds

Static prompts are no longer the product. Real products now look like real‑time, multimodal agents living inside apps, tools, and yes, games.

Games give you what most AI products don’t:

- A rich, simulated world with rules, physics, and feedback loops.

- Millions of organic interactions per day.

- A safe sandbox to test memory, autonomy, and coordination.

You can’t teach long‑horizon reasoning inside a chat box. You need a world.

OpenAI has been heading here for a while. The Global Illumination acqui‑hire in 2023 (the team behind Biomes) hinted at this path. Sora pushed video simulation forward. GPT‑4o showed real‑time voice, vision, and emotion. A studio closes the loop: model ➝ runtime ➝ world ➝ data ➝ better model.

What changed — and why it matters

Three pressures are converging:

1) Product pressure: Users want assistants that act, not just answer.

2) Data pressure: Models need interactive, long‑tail, dynamic data.

3) Economic pressure: Inference has to feel instant and stay affordable.

A first‑party studio gives OpenAI a controlled environment to solve all three.

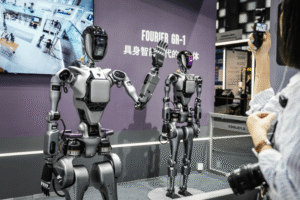

- Build AI‑native NPCs that remember, plan, and adapt.

- Generate synthetic trajectories at scale (dialogue, strategy, cooperation).

- Benchmark inference latency, caching, and on‑device vs. cloud trade‑offs.

This isn’t a side quest. It’s model R&D disguised as entertainment.

Strategy: the three bets behind the buy

1) Synthetic data engine

Games are among the best simulators for long‑horizon reasoning. Think quests, negotiations, alliances, betrayals. Every session can become training data or an eval signal.

- Multi‑agent coordination and emergent behavior.

- Tool use inside worlds (crafting, trading, planning).

- Safe failure modes, rich edge cases, repeatable scenarios.

Own the world, own the data flywheel.
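One way to picture "every session becomes training data" is to log each agent decision as a structured record and write the episode out as JSON Lines. This is a minimal sketch under stated assumptions, not a description of any real pipeline: the `Step` and `Episode` types, their field names, and the `negotiation` scenario are all hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Step:
    """One agent decision inside the world (all fields are illustrative)."""
    agent_id: str
    observation: str   # what the agent saw
    action: str        # what it did (dialogue line, trade, move, ...)
    reward: float      # feedback signal from the game rules


@dataclass
class Episode:
    """A full session: an ordered list of steps plus a scenario label."""
    scenario: str
    steps: list[Step] = field(default_factory=list)

    def record(self, agent_id: str, observation: str, action: str, reward: float) -> None:
        self.steps.append(Step(agent_id, observation, action, reward))

    def total_reward(self) -> float:
        return sum(s.reward for s in self.steps)

    def to_jsonl(self) -> str:
        """Serialize one step per line, tagged with scenario and step index."""
        return "\n".join(
            json.dumps({"scenario": self.scenario, "t": t, **asdict(s)})
            for t, s in enumerate(self.steps)
        )


# Hypothetical two-step negotiation between a player and a merchant NPC.
episode = Episode(scenario="negotiation")
episode.record("npc_merchant", "player offers 10 gold", "counter with 15 gold", 0.0)
episode.record("npc_merchant", "player offers 12 gold", "accept", 1.0)
print(episode.to_jsonl())
```

Because each line is a self-contained record, the same file can serve as a training trajectory or be replayed as a repeatable eval scenario.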

2) Agent UX lab

Voice, gaze, gesture, memory — all stress‑tested at scale.

- Sub‑300ms turn‑taking for natural voice.

- Persistent memory across sessions and characters.

- Emotional grounding without the uncanny valley.

Build once, ship everywhere: the same runtime powers support agents, tutors, copilots.
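The sub‑300ms target is easier to reason about as a per‑stage budget. The sketch below sums stage latencies for one voice turn and reports the headroom. The stage names and millisecond figures are assumptions made up for illustration, not measurements of any real system.

```python
TURN_BUDGET_MS = 300  # the turn-taking target named in the list above

# Hypothetical per-stage latencies for one voice turn (illustrative only).
stages_ms = {
    "speech_to_text": 80,
    "model_first_token": 120,
    "text_to_speech_first_audio": 60,
    "network_round_trip": 30,
}


def headroom_ms(stages: dict[str, int], budget_ms: int = TURN_BUDGET_MS) -> int:
    """Return the remaining budget; a negative value means the turn is too slow."""
    return budget_ms - sum(stages.values())


def slowest_stage(stages: dict[str, int]) -> str:
    """Return the stage with the largest latency, i.e. the one to optimize first."""
    return max(stages, key=stages.get)


print(headroom_ms(stages_ms))    # 10
print(slowest_stage(stages_ms))  # model_first_token
```

With these assumed numbers the turn fits with 10 ms to spare, which shows how little slack a 300 ms budget leaves once every stage is counted.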

3) Inference economics, for real

Games expose the bill in daylight. Concurrency. Spikes. Hard SLAs.

Back‑of‑the‑envelope: a popular title with 100k concurrent players and a few live NPCs each turns “pennies per minute” into “millions per month.” That pressure forces innovation:

- Distillation and small‑model fallbacks.

- Caching, stateful loops, and function‑calling over freeform text.

- Edge acceleration on NPUs; server only for hard reasoning.

If you can make agents cheap in a game, you can make them cheap anywhere.
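To make the back‑of‑the‑envelope above concrete, here is a rough sketch. Only the 100k concurrent players comes from the text. The NPC count, the per‑minute prices, the cache hit rate, and the share of traffic a small model can handle are all assumptions, and the estimate treats peak concurrency as if it held all month, so read it as an upper bound.

```python
MINUTES_PER_MONTH = 60 * 24 * 30  # 43,200


def monthly_cost(players: int, npcs_per_player: int, cost_per_npc_minute: float) -> float:
    """Cost if every NPC incurs the given per-minute cost, all month long."""
    return players * npcs_per_player * cost_per_npc_minute * MINUTES_PER_MONTH


def blended_cost_per_minute(large: float, small: float,
                            cache_hit_rate: float, small_share: float) -> float:
    """Average per-NPC-minute cost with a cache and a small-model fallback.

    cache_hit_rate: fraction of turns served from cache (assumed free here).
    small_share: fraction of the remaining turns the small model handles.
    """
    uncached = 1.0 - cache_hit_rate
    return uncached * (small_share * small + (1.0 - small_share) * large)


# Assumed inputs: 3 NPCs per player, $0.01 per NPC-minute on a large model.
baseline = monthly_cost(100_000, 3, 0.01)

# Assumed mitigations: 30% cache hits, 80% of the rest on a model 10x cheaper.
blended = blended_cost_per_minute(large=0.01, small=0.001,
                                  cache_hit_rate=0.30, small_share=0.80)
optimized = monthly_cost(100_000, 3, blended)

print(f"baseline:  ${baseline:,.0f} per month")
print(f"optimized: ${optimized:,.0f} per month")
```

Under these assumptions the baseline lands at roughly $130 million a month, and the cache plus small‑model fallback cut it to roughly $25 million. The exact figures matter less than the shape: the bill scales linearly with concurrency, so every percentage point moved off the large model shows up directly in cost.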

The product angle: vertical integration that ships

OpenAI has learned a simple truth: demos inspire; reference products convince.

A studio lets them ship first‑party titles that show the full stack:

- Model + runtime + world + tooling.

- Creators get SDKs, not PDFs.

- Developers see what “good” feels like — pacing, memory, failover, costs.

It’s the Apple playbook. Don’t just publish an API. Publish a taste.

What to watch next

- Latency: Can they keep sub‑300ms interactive loops at scale?

- Cost per active minute: Does it stay affordable under concurrency spikes?

- Tooling: Will there be a Unity/Unreal SDK for AI NPCs out of the box?

- Memory: Can personalities stay stable without creepy drift or prompt rot?

- UGC: Can player‑authored behaviors stay safe and fun?

- Platform politics: How will app stores, age ratings, and moderation shape what ships?

Risks and realities

- Focus risk: Studio gravity can pull a lab off‑mission.

- Partner risk: Competing with the ecosystem you want to power.

- Moderation risk: AI characters are messy by design.

- Talent risk: Game timelines vs. research timelines.

The upside outweighs these risks, because the prize isn’t a hit game. It’s a better model with a native distribution channel.

Founder lessons (for builders like us)

- Build a world, not a demo. Worlds create data and demand.

- Own a closed loop: model ➝ UX ➝ telemetry ➝ training. That compounding is your moat.

- Design for an inference budget. Treat tokens like battery life.

- Ship a reference app. Show, don’t tell, what your stack can do.

- Memory is product. Without it, agents feel like toys.

- Instrument everything. Logs are your second dataset.
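"Treat tokens like battery life" can be taken fairly literally. Here is a minimal sketch, assuming a hypothetical per‑session `TokenBudget` that drops to a cheaper tier as the allowance drains, much as a phone switches power modes. The class, the thresholds, and the tier names are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class TokenBudget:
    """Per-session token allowance (hypothetical; thresholds are illustrative)."""
    capacity: int
    used: int = 0

    def remaining_fraction(self) -> float:
        return max(0.0, 1.0 - self.used / self.capacity)

    def spend(self, tokens: int) -> None:
        self.used += tokens

    def tier(self) -> str:
        """Pick a model tier from the fraction of budget left."""
        left = self.remaining_fraction()
        if left > 0.5:
            return "large"     # full-quality model
        if left > 0.1:
            return "small"     # cheaper fallback model
        return "scripted"      # canned responses, no model call


budget = TokenBudget(capacity=10_000)
print(budget.tier())   # large
budget.spend(6_000)
print(budget.tier())   # small
budget.spend(3_500)
print(budget.tier())   # scripted
```

The point of the design is that degradation is planned rather than accidental: the session keeps working as the budget runs down, only at a lower quality tier.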

Buildloop reflection

“AI studios will look like game studios. And game studios will look like AI labs.”

This move isn’t about entertainment. It’s about evolution.
