What Changed and Why It Matters

Nigeria now counts 300+ active AI startups. Most can’t train models at home. They ship workloads to foreign clouds and data centers.

That’s the signal: talent and ideas are rising, but compute, power, and policy are lagging. The gap turns innovation into an import.

“Nigeria’s data centres are still years from supporting core AI workloads.”

Zoom out and the pattern becomes obvious. Across Africa, AI adoption is growing, but core enablers—reliable power, GPU-grade facilities, and structured training—are uneven.

“Only 31% of surveyed African universities offer dedicated AI programmes.”

Here’s the part most people miss: this isn’t just a hardware problem. It’s a system problem—education, energy, data center design, and national strategy have to move together.

The Actual Move

- Nigerian startups are exporting model training to overseas clouds and facilities due to limited local GPU capacity and power reliability.

- Industry voices warn the country could deepen its dependence on foreign infrastructure if the gap persists.

- Regional data center buildouts are accelerating, but operators caution that AI-grade, high-density capacity is not yet mainstream locally.

- The talent pipeline is constrained. A World Bank–referenced survey shows that only a minority of African universities offer dedicated AI programs.

- Funding is flowing into African AI: 159 startups have raised more than $803M. Infrastructure still lags demand.

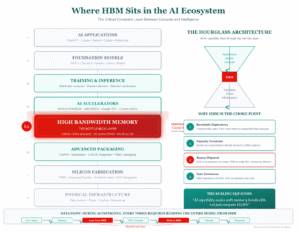

- Compute access is scarce: only 5% of Africa’s AI talent has access to the computational power it needs.

“159 African AI startups have raised over $803 million.”

“Only five percent of Africa’s AI talent has access to the computational power it needs.”

“Nigeria risks deepening its dependence on foreign AI infrastructure.”

The Why Behind the Move

Startups are optimizing for speed to market. When local compute is unreliable or unavailable, they rent GPUs abroad. It’s rational—and costly.

• Model

Building on foreign clouds lowers setup time and capital expense. It also pushes recurring spend and data governance offshore.

• Traction

Teams prioritize inference-first products and fine-tunes over full pretraining. That path is faster and cheaper while local compute matures.

• Valuation / Funding

Capital is entering the continent, and investors reward pragmatic infrastructure choices today. Long term, margins compress if cloud spend dominates cost of goods sold (COGS).
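To make the margin point concrete, here is a minimal sketch assuming a startup that bills customers in naira (NGN) but pays its cloud provider in US dollars. Every figure below, including the exchange rates, is a hypothetical placeholder chosen for round arithmetic, not data from the sources.

```python
def gross_margin(revenue_ngn, cloud_usd, other_cogs_ngn, usd_ngn_rate):
    """Gross margin as a fraction of revenue.

    Hypothetical model: revenue and non-cloud COGS are in NGN; the cloud
    bill is in USD and is converted to NGN at usd_ngn_rate.
    """
    cloud_ngn = cloud_usd * usd_ngn_rate        # USD bill restated in NGN
    cogs_ngn = cloud_ngn + other_cogs_ngn       # total cost of goods sold
    return (revenue_ngn - cogs_ngn) / revenue_ngn


# Hypothetical monthly figures: identical revenue and identical USD cloud
# bill, evaluated at three illustrative USD/NGN exchange rates.
REVENUE_NGN = 100_000_000
CLOUD_USD = 20_000
OTHER_COGS_NGN = 10_000_000

for rate in (1_000, 1_500, 2_000):
    margin = gross_margin(REVENUE_NGN, CLOUD_USD, OTHER_COGS_NGN, rate)
    print(f"USD/NGN {rate:>5,}: gross margin {margin:.0%}")
```

With these made-up inputs the margin falls from 70% to 60% to 50%, although the product, the customers, and the dollar-denominated cloud bill never change. Only the exchange rate moves, which is why cloud spend that dominates COGS makes margins fragile.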

• Distribution

Global clouds provide stable SLAs and familiar tools. Local alternatives win only when their reliability, latency, and support match or exceed those of the global providers.

• Partnerships & Ecosystem Fit

Winners will partner across power providers, data centers, universities, and public agencies. AI-ready facilities need energy, cooling, and talent—together.

• Timing

AI demand is here now. Data center readiness is multi-year. The bridge strategy is hybrid: train abroad, serve regionally, and colocate as local capacity stabilizes.

• Competitive Dynamics

Sovereign AI ambitions matter. Countries with clear AI strategies, stable power, and GPU incentives will attract model training and data gravity.

• Strategic Risks

- Cost: FX volatility and foreign cloud bills erode margins.

- Compliance: Cross-border data flows create privacy and sovereignty risk.

- Lock-in: Tooling and credits can trap teams on one provider.

- Talent: Infra gaps accelerate brain drain.

What Builders Should Notice

- Build hybrid from day one: train abroad, cache or serve close to users.

- Treat power as a feature. Reliability and cost shape your unit economics.

- Form GPU co-ops or credit syndicates. Aggregate demand to lower cost.

- Invest in MLOps discipline. Efficiency beats raw compute when compute is scarce.

- Partner locally for resilience: universities, data centers, and power providers.
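Why pooling demand can lower cost is easiest to see with volume pricing. The sketch below assumes a hypothetical provider whose per-GPU-hour price drops at committed monthly volumes; the tier thresholds, prices, and startup names are invented for illustration, and real discount structures (reserved or committed-use plans, negotiated contracts) vary by provider.

```python
# Hypothetical volume tiers, sorted ascending:
# (minimum monthly GPU-hours, price in USD per GPU-hour).
TIERS = [(0, 3.00), (2_000, 2.40), (5_000, 2.00)]


def price_per_hour(gpu_hours):
    """Return the price of the highest tier this volume qualifies for."""
    price = TIERS[0][1]
    for min_hours, tier_price in TIERS:
        if gpu_hours >= min_hours:
            price = tier_price
    return price


def solo_costs(demand):
    """Each member buys alone and pays the tier its own volume reaches."""
    return {name: hours * price_per_hour(hours) for name, hours in demand.items()}


def pooled_costs(demand):
    """The co-op buys the combined volume; members split it pro rata by hours."""
    rate = price_per_hour(sum(demand.values()))
    return {name: hours * rate for name, hours in demand.items()}


# Hypothetical monthly GPU-hour needs for three co-op members.
demand = {"startup_a": 800, "startup_b": 1_500, "startup_c": 2_700}
solo = solo_costs(demand)
pooled = pooled_costs(demand)

for name in demand:
    print(f"{name}: solo ${solo[name]:,.2f} -> pooled ${pooled[name]:,.2f}")
print(f"total: solo ${sum(solo.values()):,.2f} -> pooled ${sum(pooled.values()):,.2f}")
```

With these made-up tiers the three teams would pay $13,380 buying separately and $10,000 buying together, because the combined 5,000 GPU-hours reach a tier none of them reaches alone. Splitting by hours at the pooled rate is just one simple allocation rule; a real co-op would also need to handle scheduling, priority, and members who under-use a commitment.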

Buildloop Reflection

“AI doesn’t just need talent—it needs sockets. Strategy is where they meet.”

Sources

- BusinessDay Nigeria — Nigeria’s 300 AI startups train models abroad due to infrastructure gap

- ODI (Overseas Development Institute) — Brains, bytes and bottlenecks: fixing Africa’s AI talent gap

- Medium — Bridging the AI Divide: Building Africa’s Future in the Age of Intelligent Machines

- Facebook (Africa is Home) — Nigeria’s data centres are still years from supporting core AI workloads

- Startuplist Africa — The $803M Question – Can Africa Build AI or Just Use It

- AfricaBusiness.com — Racing Against the Talent Gap: Sustaining Africa’s Data Center Growth

- LinkedIn — Africa’s AI Strategy Gap: A Strategic Red Flag

- The Sun Nigeria — Experts: Nigeria risks falling behind digitally over AI infrastructure gaps

- Acumen — How entrepreneurs in Africa are shaping the future with AI