What Changed and Why It Matters

US export controls targeted Nvidia’s top AI chips. The goal: slow China’s AI progress. The result: a large, liquid gray market that keeps GPUs flowing—at a premium.

Reports describe a persistent supply of A100/H100-class accelerators reaching China through intermediaries and refurbishment shops, alongside rented remote access to GPUs hosted overseas. China is also reportedly steering state-funded data centers away from foreign chips, which accelerates demand for domestic alternatives.

Here’s the pattern: scarcity didn’t kill demand. It professionalized the workaround.

Export controls don’t erase demand—they weaponize scarcity.

The Actual Move

This shift is not one announcement. It’s a system-level chain reaction:

- US controls first restricted Nvidia’s A100 and H100, then extended to the later China-specific compliance variants (A800/H800). Channels to China were cut or narrowed.

- Media reports indicate China moved to ban foreign-made AI chips from state-funded data center projects. This pushes buyers toward domestic suppliers, especially Huawei’s Ascend line.

- Nvidia pushed back on diversion with stricter know-your-customer (KYC) checks, spot checks, and identity verification to curb unauthorized resales and re-exports.

- A thriving gray market emerged. Smugglers, front companies, and brokers rerouted supply via Hong Kong and Southeast Asia. Prices rose well above official quotes.

- Shenzhen repair shops scaled up, refurbishing and resurrecting banned accelerators. Some reportedly process hundreds of units monthly, maintaining supply and uptime.

- Chinese AI teams continued accessing banned GPUs indirectly—renting time on overseas racks or through third parties. Compute moved, demand didn’t.

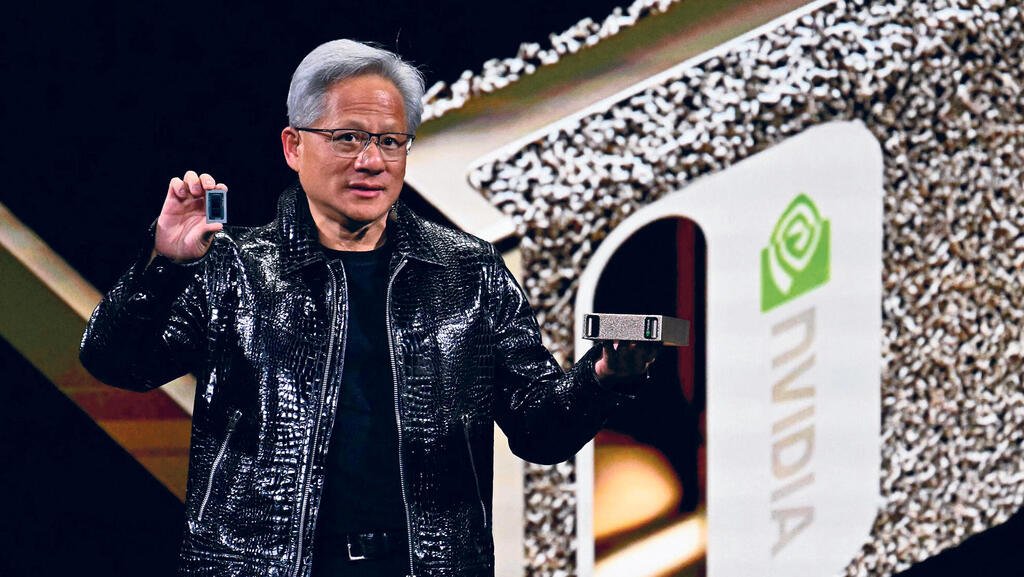

- Nvidia leadership publicly expressed disappointment at reported Chinese bans on its chips, underscoring a hard decoupling of state projects from foreign hardware.

The hardware didn’t disappear. It changed hands—and margins.

The Why Behind the Move

Zoom out and the economics become clear: compute is the scarce input. When you cap a scarce input, you create arbitrage, integrators, and repair ecosystems.

• Model

GenAI economics reward more compute. Controls raised the effective price of FLOPS in China. That pushed teams toward efficiency (compression, distillation) and creative procurement.
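To make "effective price of FLOPS" concrete, here is a minimal sketch of the arithmetic. Every number, field name, and function name below is a hypothetical placeholder chosen for illustration, not a figure from the reports cited in this piece. The point is only that a markup and lower uptime compound: you pay more and get less usable compute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorOffer:
    """One way to obtain a card. All values used below are hypothetical."""
    name: str
    list_price_usd: float   # official quote for one card
    premium: float          # markup over list price, e.g. 1.0 means +100%
    peak_tflops: float      # vendor peak throughput
    utilization: float      # fraction of peak a workload achieves (0..1)
    uptime: float           # fraction of time the card is usable (0..1)
    lifetime_hours: float   # expected service life in hours


def cost_per_effective_pflops_hour(offer: AcceleratorOffer) -> float:
    """Purchase cost divided by useful compute delivered over the card's life."""
    paid = offer.list_price_usd * (1.0 + offer.premium)
    effective_tflops = offer.peak_tflops * offer.utilization * offer.uptime
    pflops_hours = effective_tflops / 1000.0 * offer.lifetime_hours
    return paid / pflops_hours


# Hypothetical comparison: same card, bought at list vs. through a broker.
official = AcceleratorOffer("official", 30_000, 0.0, 1_000, 0.4, 0.95, 30_000)
gray = AcceleratorOffer("gray-market", 30_000, 1.0, 1_000, 0.4, 0.85, 30_000)

ratio = cost_per_effective_pflops_hour(gray) / cost_per_effective_pflops_hour(official)
print(f"gray-market compute costs {ratio:.2f}x the official channel per useful PFLOPS-hour")
```

Under these assumed inputs, a 2x sticker premium becomes more than a 2x cost per useful unit of compute once the reduced uptime is counted, which is why efficiency work such as distillation pays off faster when compute is scarce.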

• Traction

China’s AI workload isn’t shrinking. Foundation models, recommenders, and vision systems need accelerators. When official channels tightened, shadow channels expanded.

• Valuation / Funding

Premiums on H100-class cards multiplied. Capital shifted from list-price buys to gray-market procurement, refurb, and remote rentals. Compute became an investment category, not just a cost line.

• Distribution

Distribution beat performance. Brokers with access, logistics, and repair capacity became kingmakers. Access—more than raw TFLOPS—set the pace for teams.

In AI hardware, the moat isn’t FLOPS—it’s the channels.

• Partnerships & Ecosystem Fit

Chinese state-backed buyers turned to domestic ecosystems. Huawei’s Ascend and other local players gained pull. Integration partners who could wire up software stacks and service fleets won share.

• Timing

Controls tightened just as GenAI demand spiked. The mismatch amplified premiums, spawned refurbishment capacity, and normalized overseas GPU rentals.

• Competitive Dynamics

- Nvidia vs. policy: firmware, compliance, and verification became product features.

- Domestic chips vs. gray market: a near-term performance gap is offset by guaranteed availability and political alignment.

- AMD’s accelerators and other alternatives matter, but channels still decide who trains at scale.

• Strategic Risks

- Legal and compliance exposure for buyers and intermediaries.

- Reliability risks from refurbished or modified units.

- Software lockouts, audits, and sudden policy shifts.

- Vendor concentration and support gaps for non-official hardware.

Here’s the part most people miss: bans create integrators, not just smugglers.

What Builders Should Notice

- Plan for compute volatility. Diversify across vendors, geos, and generations.

- Efficiency is strategy. Smaller, distilled models with smart caching often win.

- Distribution is a moat. Secure commitments from multiple hardware channels.

- Treat repair and uptime as first-class. Spares, SLAs, and telemetry matter.

- Design for portability. Make training and inference migratable across stacks.
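The diversification and portability points above can be sketched as a small placement rule: keep an ordered list of acceptable vendors and fall through to the next one when the preferred pool is unhealthy or full. This is an illustrative sketch only. `ComputePool`, `pick_pool`, and the vendor and region labels are hypothetical names, not part of any real scheduler.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ComputePool:
    """A group of accelerators you can schedule onto (hypothetical model)."""
    name: str
    vendor: str
    region: str
    free_gpus: int
    healthy: bool


def pick_pool(
    pools: Sequence[ComputePool],
    gpus_needed: int,
    preferred_vendors: Sequence[str],
) -> Optional[ComputePool]:
    """Return the roomiest usable pool from the most-preferred vendor that has one."""
    for vendor in preferred_vendors:
        candidates = [
            p for p in pools
            if p.vendor == vendor and p.healthy and p.free_gpus >= gpus_needed
        ]
        if candidates:
            return max(candidates, key=lambda p: p.free_gpus)
    return None  # nothing fits: queue the job or shrink the request


# Hypothetical fleet: the preferred vendor's pool is down, so the job falls through.
pools = [
    ComputePool("a-1", "vendor_a", "region_1", 64, healthy=False),
    ComputePool("b-1", "vendor_b", "region_2", 32, healthy=True),
    ComputePool("b-2", "vendor_b", "region_3", 128, healthy=True),
]
choice = pick_pool(pools, gpus_needed=16, preferred_vendors=["vendor_a", "vendor_b"])
print(choice.name if choice else "no capacity")
```

A rule like this only helps if the workload itself can run on more than one stack, which is the practical meaning of designing for portability.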

Buildloop reflection

Scarcity clarifies strategy. In AI, access compels invention.

Sources

- CNBC — Nvidia CEO disappointed after reports China has banned …

- Asia Times — China gets banned Nvidia AI chips via gray markets

- PC Gamer — Raising a digital digit to Nvidia, China reportedly set to ban …

- Tom’s Hardware — Underground China repair shops thrive servicing illicit …

- CTech by Calcalist — China’s AI chip gray market threatens US export restrictions

- The Wall Street Journal — China’s AI Engineers Are Secretly Accessing Banned …

- The New York Times — With Smugglers and Front Companies, China Is Skirting …

- Notebookcheck — Gray-market repairs for banned Nvidia H100 and A100 …

- SOPA Awards — Nvidia AI Chip Smuggling to China Becomes an Industry