What Changed and Why It Matters

AI in healthcare just crossed an important line. Health copilots are moving from generic Q&A to personal advisors grounded in your own data.

Microsoft signaled this shift with Azure AI Health Bot, which adds built‑in healthcare safeguards for building copilots. Consumer tools promise “your own voice, your own knowledge.” Clinicians and patients are already testing AI against real health records.

Here’s the pattern: personal context, long‑term memory, and safety guardrails are becoming standard. The result is higher‑quality answers—and higher stakes.

“Azure AI Health Bot… provides built-in healthcare safeguards now in private preview, for building copilot experiences.”

“12 updates to its Copilot chatbot including long-term memory capabilities and health information tools.”

The Actual Move

Multiple signals point to personal health copilots going mainstream:

- Microsoft introduced Azure AI Health Bot with generative AI and built‑in healthcare safeguards, now in private preview. It’s designed to power healthcare copilot experiences.

- Copilot is broadly available on PC, Mac, mobile, and web—positioned as a daily assistant.

“Copilot is your digital companion. It is designed to inform, entertain and inspire.”

- Microsoft’s Copilot picked up long‑term memory features and health information tools, underscoring a push toward persistent, contextual assistants.

- A Microsoft session (BRK257) showed healthcare copilot scenarios aimed at streamlining workflows and improving efficiency.

“See how these AI companions are revolutionizing workflow, enhancing efficiency.”

- The AMA framed AI as a physician “co‑pilot,” emphasizing safe deployment and phased use.

“Physicians and health IT should collaborate to monitor AI tools for safe use, starting with low-risk cases to cut administrative burdens.”

- Market roundups now catalog healthcare copilots that draft notes, speed diagnosis, and boost productivity.

“Leading AI copilots helping doctors write notes, speed up diagnosis, and boost efficiency.”

- On the consumer side, personal AI products market “your own model” grounded in your data.

“Drafts responses from within your own Personal AI model and in your own voice, grounded by your own knowledge.”

- Patients are experimenting with GPT‑4 to analyze their own medical data.

“Used GPT-4 as… co-pilot… to analyze his medical data and make connections.”

- A practical guide walks through building a personal “health advisor” that synthesizes data into evidence‑based plans.

“Act as a Personal Health Advisor that synthesizes your data into evidence‑based hypotheses and step‑by‑step plans.”

Taken together: distribution is ready, the guardrails are forming, and both clinicians and consumers are pushing for answers grounded in their own records.

The Why Behind the Move

Zoom out and the pattern becomes obvious: grounded, memory‑aware copilots plus strong safeguards will drive trust—and adoption.

• Model

- Answers grounded in retrieved personal health data are more relevant than generic LLM output.

- Long‑term memory raises utility for longitudinal care and follow‑ups.

- Safety layers and domain constraints reduce risk and drift.
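To make these three ingredients concrete, here is a minimal Python sketch of a grounded answer path: check safety constraints first, retrieve from the patient's own records, and carry forward memory notes across turns. Everything here is hypothetical. The `Record` and `Memory` types, the keyword‑overlap retriever (a stand‑in for real embedding search), the red‑flag term list, and all function names are illustrative assumptions, not any vendor's API, and no LLM is actually called.

```python
import re
from dataclasses import dataclass, field

# Hypothetical record type: one entry from a patient's own data (lab, note, wearable).
@dataclass
class Record:
    source: str
    text: str

# Hypothetical long-term memory: notes carried across sessions.
@dataclass
class Memory:
    notes: list[str] = field(default_factory=list)

# Illustrative domain constraint: these topics are escalated, never answered.
RED_FLAGS = {"chest pain", "overdose", "suicidal"}

def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(records: list[Record], question: str, k: int = 2) -> list[Record]:
    """Rank records by keyword overlap with the question (stand-in for vector search)."""
    q = tokens(question)
    scored = [(len(q & tokens(r.text)), r) for r in records]
    scored = [(s, r) for s, r in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in scored[:k]]

def answer(question: str, records: list[Record], memory: Memory) -> str:
    # Safety layer runs before any retrieval or generation.
    if any(flag in question.lower() for flag in RED_FLAGS):
        return "ESCALATE: contact a clinician or emergency services."
    hits = retrieve(records, question)
    if not hits:
        # Decline instead of falling back to ungrounded output.
        return "No matching records; cannot give a grounded answer."
    context = "; ".join(f"[{r.source}] {r.text}" for r in hits)
    history = " | ".join(memory.notes[-3:])  # recent longitudinal context
    memory.notes.append(f"asked: {question}")
    # A real system would pass `context` and `history` to a model; here we echo them.
    return f"Grounded in: {context}. Prior context: {history or 'none'}."
```

The ordering is the design point: the safety check runs before retrieval, and an empty retrieval result produces a refusal rather than a generic answer.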

• Traction

- Clinical note‑taking and workflow copilots are gaining real use.

- Consumers want clarity from their own labs, wearables, and history.

• Valuation / Funding

- Capital follows adoption in repeatable workflows. Documentation and triage are low‑risk beachheads.

• Distribution

- Microsoft can ship copilots into existing clinician workflows and devices.

- Personal AI apps ride on bring‑your‑own‑data flows and patient portals.

• Partnerships & Ecosystem Fit

- Health systems, EHRs, and data platforms become essential partners.

- Professional bodies (such as the AMA) shape safe rollout norms.

• Timing

- Better guardrails and memory make personal advisors viable.

- Patients now have easier access to digital records.

• Competitive Dynamics

- Big clouds (Microsoft, Google, AWS) battle on compliance, tooling, and channels.

- Startups differentiate on niche workflows, UX, and trust.

• Strategic Risks

- High‑stakes hallucinations: overconfident answers can harm patients.

- Data consent, portability, and privacy enforcement.

- Liability ambiguity between vendors, clinicians, and patients.

- Over‑automation without clinician oversight.

Here’s the part most people miss: the winning loop is trusted data rights plus distribution, not model novelty.

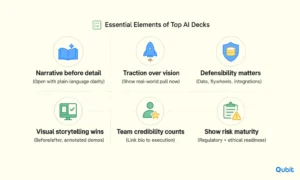

What Builders Should Notice

- Grounding + memory > raw model power. Personal context makes answers useful.

- Trust is the moat. Consent, audit trails, and explainability are product features.

- Start where risk is low. Documentation and post‑visit summaries earn adoption.

- Distribution beats features. Ship inside existing clinical and consumer channels.

- Design for co‑pilot, not autopilot. Human oversight should be the default path.
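The trust and co‑pilot points translate into concrete product mechanics. Below is a minimal Python sketch, assuming a hypothetical consent registry keyed by patient and data scope, an append‑only audit log, and a rule that AI drafts (here, a post‑visit summary) stay pending until a named clinician approves them. None of these names come from the sources above; they illustrate the pattern, not a specific product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class AuditEvent:
    at: str
    actor: str
    action: str
    detail: str

@dataclass
class Copilot:
    # Hypothetical consent registry: patient id -> data scopes the patient allowed.
    consents: dict[str, set[str]] = field(default_factory=dict)
    # Append-only audit trail of every decision the system makes.
    audit: list[AuditEvent] = field(default_factory=list)

    def _log(self, actor: str, action: str, detail: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit.append(AuditEvent(stamp, actor, action, detail))

    def draft_summary(self, patient: str, scope: str, text: str) -> dict:
        # Consent is checked (and the denial logged) before any draft exists.
        if scope not in self.consents.get(patient, set()):
            self._log("copilot", "denied", f"{patient}:{scope} no consent")
            raise PermissionError(f"no consent for scope {scope!r}")
        draft = {"patient": patient, "text": text, "status": "pending_review"}
        self._log("copilot", "drafted", f"{patient}:{scope}")
        return draft

    def approve(self, draft: dict, clinician: str) -> dict:
        # Co-pilot, not autopilot: only a named clinician releases a pending draft.
        if draft.get("status") != "pending_review":
            raise ValueError("only pending drafts can be approved")
        draft["status"] = "approved"
        draft["approved_by"] = clinician
        self._log(clinician, "approved", draft["patient"])
        return draft
```

Because denials are logged as well as approvals, the audit trail records what the copilot refused to do, not just what it shipped.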

Buildloop reflection

“Trust is the new UX. Your data is the new moat.”

Sources

- Microsoft Azure Blog — Azure AI Health Bot helps create copilot experiences with …

- Medium — Owning My Health Data, With AI as Health Copilot

- Personal AI — Your True Personal AI | Personal AI for Everyone and in …

- Microsoft — Enjoy AI Assistance Anywhere with Copilot for PC, Mac …

- AI Magazine — Inside Microsoft’s Copilot Updates for Human-Centred AI

- Reddit — Someone successfully used GPT-4 as their co-pilot to help …

- YouTube — Experience the value of Copilot across healthcare | BRK257

- American Medical Association — How health AI can be a physician’s “co-pilot” to improve care

- Microsoft — Enjoy an AI Assistant Anywhere with Copilot for PC, Mac …

- Innovaccer — Top 5 AI Copilots & Agents for Healthcare in 2025