What Changed and Why It Matters

AI coding moved from pilot to default in 2025. Developers now expect code suggestions, PR summaries, test generation, and auto-fixes across their stack. Coverage is wide; conviction is mixed.

MIT Technology Review reports a split: some teams ship faster; others drown in low-quality code and style drift. Meanwhile, investors are treating AI-assisted coding as a high-upside infrastructure layer, with estimates pointing to trillions in annual value creation if adopted at scale.

Zoom out and the pattern becomes clear: productivity platforms—where teams already plan, write, review, and ship—are bundling or buying AI coding capabilities. Not to sell models, but to own the workflow, the data graph, and the daily active hours.

The moat isn’t the model. It’s owning where the work starts, lives, and gets measured.

The Actual Move

What platforms did through 2025 wasn’t one big deal—it was a roll-up of capabilities:

- Embedded AI copilots in IDEs tied to your backlog, docs, and runbooks

- Agent-driven refactors, PR reviews, and test generation wired into CI/CD

- Code search that understands systems, not just files, powered by vector indexes and graphs

- Security scanning with LLMs, catching unsafe patterns and dependency risks

- “All-hands” assistants that turn product specs, tickets, and logs into code suggestions

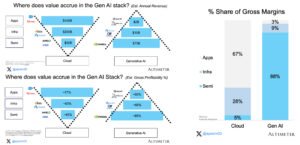

Andreessen Horowitz frames this as a new “AI software development stack” spanning assistants, agents, testing, security, and delivery. Economic Times coverage underscores why buyers lean in: value investors see durable economics in firms that translate AI into measurable developer throughput.

But there’s friction. MIT Sloan Management Review warns of a productivity trap: speed gains can mask mounting technical debt. A founder’s thread on Reddit details the chaos: style divergence, duplicate logic, security regressions. Another analysis questions whether seasoned developers actually speed up with current tools.

Here’s the part most people miss: rolling out AI coding without process redesign often increases rework, not release velocity.

The Why Behind the Move

• Model

General models improved at reasoning and long-context planning, boosting code refactors and multi-file changes. Still, platforms realize the real edge is context: codegraphs, docs, tickets, and runbooks. Retrieval quality now matters as much as raw model power.
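To make the retrieval point concrete, here is a minimal sketch of ranking context snippets against a task description. It is a toy under stated assumptions: bag-of-words cosine similarity stands in for a real embedding model and vector index, and the snippet names and texts in `CONTEXT` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def top_context(query: str, snippets: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k snippets most similar to the query."""
    q = tokenize(query)
    ranked = sorted(
        snippets,
        key=lambda name: cosine(q, tokenize(snippets[name])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical context pulled from a backlog, a runbook, and a style guide.
CONTEXT = {
    "ticket-retry": "Payment webhook retries fail after timeout; add exponential backoff",
    "runbook-db": "How to fail over the primary database during an outage",
    "doc-style": "Naming conventions and formatting rules for Python services",
}

best = top_context("fix payment webhook timeout retries", CONTEXT, k=1)
```

The same model produces very different suggestions depending on which of these snippets lands in its prompt, which is why ranking quality carries so much weight.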

• Traction

There are roughly 30 million developers globally. Coding assistants are among the fastest-adopted AI categories inside enterprises. Even with uneven output, weekly active usage is sticky when assistants live where work happens.

• Valuation / Funding

Investors treat AI coding as “picks and shovels” with large TAM and recurring monetization. Public and private market narratives favor durable, workflow-embedded tools over point solutions.

• Distribution

Productivity suites already own distribution: backlogs, docs, chat, reviews. Bundling AI coding reduces CAC, lifts ARPU, and increases seat expansion. It also raises switching costs—your code context and prompts live inside the platform.

• Partnerships & Ecosystem Fit

Expect deep alliances with model providers and IDE vendors, plus marketplaces for agents, test packs, and security rules. Platform APIs will expose codegraph context safely to third-party assistants.

• Timing

Reasoning-first models made multi-step coding viable. Combined with cheaper inference and better context windows, 2025 became the year platforms could ship end-to-end AI features—not just autocomplete.

• Competitive Dynamics

- Horizontal copilots vs tightly embedded, workflow-native assistants

- IDE-first players vs planning-and-collab suites

- Open-source stacks (for control) vs closed models (for speed)

• Strategic Risks

- Technical debt accumulation and style drift

- Security regressions and license contamination

- Over-reliance on single model vendors

- Developer trust erosion if review gates aren’t designed well

Strategy translation: Platforms are optimizing for workflow lock-in and measurable cycle-time compression, not just “AI features.”

What Builders Should Notice

- Own the context, not just the model. Your codegraph and docs are the advantage.

- Design the guardrails first: linting, tests, review gates, and secure defaults.

- Measure outcomes, not lines of code: cycle time, change failure rate, rework.

- Bundle where work lives. Distribution beats standalone novelty.

- Standardize style and patterns before scaling agents. Chaos compounds faster than speed.
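The "measure outcomes" point above can be sketched in a few lines. This is an illustration, not a standard: the `Change` record and its fields are hypothetical, and in practice the data would come from your version control, CI/CD, and incident tooling. Rework is approximated here as the share of added lines that were rewritten or deleted shortly after merge.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Change:
    """One merged change. All fields are hypothetical, for illustration."""
    opened_at: datetime     # when the PR was opened
    deployed_at: datetime   # when the change reached production
    caused_incident: bool   # did it trigger a rollback or hotfix?
    lines_added: int
    lines_reworked: int     # added lines rewritten or deleted soon after merge


def median_cycle_time_hours(changes: list[Change]) -> float:
    """Median hours from PR opened to deployed."""
    hours = [(c.deployed_at - c.opened_at).total_seconds() / 3600 for c in changes]
    return median(hours)


def change_failure_rate(changes: list[Change]) -> float:
    """Share of changes that caused an incident."""
    return sum(c.caused_incident for c in changes) / len(changes)


def rework_rate(changes: list[Change]) -> float:
    """Share of added lines that had to be redone."""
    added = sum(c.lines_added for c in changes)
    return sum(c.lines_reworked for c in changes) / added if added else 0.0


# Made-up sample data: three changes with 10h, 24h, and 6h cycle times.
SAMPLE = [
    Change(datetime(2025, 1, 1, 0), datetime(2025, 1, 1, 10), False, 100, 10),
    Change(datetime(2025, 1, 2, 0), datetime(2025, 1, 3, 0), True, 200, 80),
    Change(datetime(2025, 1, 4, 0), datetime(2025, 1, 4, 6), False, 100, 10),
]
```

Tracking these three numbers before and after an AI rollout shows whether faster authoring is turning into faster, safer delivery or simply into more rework.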

Buildloop reflection

Every market shift begins as a workflow decision, not a demo.

Sources

MIT Technology Review — AI coding is now everywhere. But not everyone is convinced.

The Economic Times — Why AI-assisted coding firms are top value picks

Andreessen Horowitz — The Trillion Dollar AI Software Development Stack

Reddit — Our startup uses AI for everything. The chaos is killing …

MIT Sloan Management Review — AI Coding Tools: The Productivity Trap Most Companies Miss

YouTube — Why AI-Assisted Coding Startups Are Becoming the Hottest …

VKTR — Do AI Coding Tools Really Increase Developer Productivity …

M Accelerator — The 4-Day AI Workweek: How Startups Are Redefining …

Dynamic Business — Startups are pouring money into these 50 AI tools, and it …