What Changed and Why It Matters

Nvidia-backed Starcloud says it has fine-tuned an AI model in orbit using an H100 GPU. That’s a milestone, not because the model was novel, but because of where it ran.

Orbital compute shifts the constraints. Terrestrial data centers face grid bottlenecks and soaring AI energy demand. Some estimates now project a 165% surge in power needs from AI alone. In space, the sun is a near-constant power source, and cooling, while limited to radiating heat away, is predictable.

“Orbital data centers could run on practically unlimited solar energy without interruption from cloudy skies or nighttime darkness.”

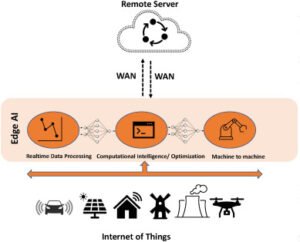

Here’s the part most people miss. Space changes the unit economics for certain workloads. Train or fine-tune models close to space-native data (Earth observation, comms telemetry, astronomy), beam back results rather than raw data, and you save on downlink cost, latency, and energy.
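To put a rough shape on the downlink argument above, here is a back-of-the-envelope sketch. Every figure in it (scene size, result size, link rate) is an illustrative assumption, not a number reported by Starcloud or any operator; only the ratio logic matters.

```python
# Back-of-the-envelope: link time for raw data vs. distilled results.
# All inputs are hypothetical placeholders, not reported figures.

def downlink_seconds(payload_bytes: float, link_bits_per_s: float) -> float:
    """Time needed to transmit a payload over a link of the given rate."""
    return payload_bytes * 8 / link_bits_per_s

RAW_SCENE_BYTES = 2e9   # assumed: one raw Earth-observation scene, 2 GB
RESULT_BYTES = 50e3     # assumed: detections/summary for that scene, 50 kB
LINK_BPS = 200e6        # assumed: 200 Mbit/s downlink during a ground pass

raw_s = downlink_seconds(RAW_SCENE_BYTES, LINK_BPS)
result_s = downlink_seconds(RESULT_BYTES, LINK_BPS)
reduction = RAW_SCENE_BYTES / RESULT_BYTES

print(f"raw scene:    {raw_s:.1f} s of link time")
print(f"results only: {result_s * 1000:.1f} ms of link time")
print(f"reduction:    {reduction:,.0f}x less data on the downlink")
```

Under these assumed inputs the saving is driven entirely by how small the distilled result is relative to the raw scene, so workloads with compact outputs (detections, alerts, summaries) benefit most.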

The Actual Move

Starcloud reportedly operated an NVIDIA H100 GPU in low Earth orbit and performed on-orbit model training: more precisely, a targeted fine-tune of an open model (reported as Google’s Gemma). The satellite ran on solar power, with edge inference and training pipelines designed around radiation and thermal constraints.

- Hardware: A flight-qualified H100, hardened for radiation and temperature swings.

- Workload: On-orbit model fine-tuning rather than full pre-training. Inference and validation ran in-situ.

- Data: Process data locally (e.g., Earth imagery or telemetry) and transmit only the distilled outputs.

- Power/Cooling: Solar arrays with radiative cooling—no water chillers, no grid interconnect.
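Radiative cooling can be sized with the Stefan-Boltzmann law, P = εσAT⁴. The sketch below is a first-order estimate only: the 700 W heat load, the emissivity, and the radiator temperature are assumptions chosen for illustration, and it ignores absorbed sunlight, Earth infrared, and view-factor effects that a real thermal design would have to include.

```python
# First-order radiator sizing from the Stefan-Boltzmann law.
# Ignores absorbed sunlight, Earth IR, and view factors (simplifying assumptions).

STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)

def radiator_area_m2(heat_w: float, emissivity: float, temp_k: float) -> float:
    """Area needed to reject heat_w by radiation alone to deep space."""
    flux = emissivity * STEFAN_BOLTZMANN * temp_k ** 4  # W per m^2 radiated
    return heat_w / flux

HEAT_W = 700.0     # assumed heat load, about one high-end GPU module
EMISSIVITY = 0.9   # assumed radiator coating
TEMP_K = 300.0     # assumed radiator surface temperature

area = radiator_area_m2(HEAT_W, EMISSIVITY, TEMP_K)
print(f"radiator area: {area:.2f} m^2 per {HEAT_W:.0f} W")
```

Because rejected power scales with the fourth power of temperature, running the radiator hotter shrinks the required area quickly, which is one reason thermal design and chip operating limits are tightly coupled in orbit.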

The move fits a broader pattern. Multiple players are now racing to build “orbital clouds.”

- PowerBank + Smartlink AI announced Orbit AI—an autonomous in-orbit compute layer for AI workloads.

- Aetherflux floated orbital data centers tied to a space-based power grid strategy.

- New startups pitch a “Galactic Brain”—localized compute in sun-synchronous orbit sending back results only.

- Industry maps now track early leaders and use cases—from astronaut assistants to on-orbit anomaly detection.

- A technical white paper outlines modular scaling: compute, power, cooling, and networking assembled in orbit to reach gigawatt-class capacity.

“Satellites with localized AI compute, where just the results are beamed back from low-latency, sun-synchronous orbit, will be the lowest cost …”

“Orbit AI is creating the first truly intelligent layer in orbit — satellites that compute, verify, and optimize themselves autonomously.”

The Why Behind the Move

This is not about beating Earth on latency. It’s about energy, data gravity, and strategic resilience.

• Model

Space-native workloads are the natural fit for on-orbit fine-tuning and inference: Earth observation, space traffic awareness, satellite health, and comms optimization. Processing on board cuts downlink volume and lets the satellite act on results in near real time.

• Traction

Public confirmation that an H100-class GPU can operate in orbit de-risks the hardware stack. It moves orbital compute from concept to first proof of viability.

• Valuation / Funding

Nvidia backing signals strategic relevance: wherever GPU demand grows, Nvidia follows. Expect investor interest tied to power arbitrage (solar in space) and data gravity (EO and comms data).

• Distribution

Distribution runs through space operators, satellite bus providers, and ground segment partners. Downlink bandwidth stops being the bottleneck when only results are sent back.

• Partnerships & Ecosystem Fit

Winners will pair with launch providers, bus manufacturers, solar and radiator suppliers, and ground stations. Model providers and defense/EO partners complete the loop.

• Timing

- AI energy demand is outpacing grid build-outs.

- Solar in space is abundant and persistent.

- Launch costs are falling; rideshare and modular assembly are maturing.

- GPU supply remains tight; new venues with better energy economics matter.

• Competitive Dynamics

Early credibility matters. Starcloud’s on-orbit H100 plus newcomers like Orbit AI and Aetherflux create an ecosystem narrative: orbital compute is investable. Expect hyperscalers to explore joint ventures as workloads mature.

• Strategic Risks

- Space environment: radiation, single-event upsets, thermal cycling, micrometeoroids, debris.

- Ops: in-orbit maintenance, repair, and module replacement.

- Economics: launch, insurance, and replacement cycles must pencil out against terrestrial TCO.

- Regulatory: export controls, remote sensing rules, spectrum allocations, and cross-border data.

- Product fit: interactive, latency-sensitive apps still prefer Earth.
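The economics risk above can be framed as a simple cost-per-useful-hour comparison. The sketch below is a toy model: the `Deployment` class and every dollar figure, lifetime, and utilization value are hypothetical placeholders, not data from the article's sources. It shows only how launch and insurance overhead trades against energy savings.

```python
# Toy TCO comparison: cost per useful compute hour, ground vs. orbit.
# Every number below is a hypothetical placeholder, not a sourced figure.
from dataclasses import dataclass

HOURS_PER_YEAR = 8760

@dataclass
class Deployment:
    capex_usd: float           # hardware, plus launch and insurance for orbit
    lifetime_years: float      # replacement cycle
    power_kw: float            # average draw per node
    energy_usd_per_kwh: float  # 0 for orbit here: arrays are folded into capex
    utilization: float         # fraction of hours doing useful work

    def energy_cost_usd(self) -> float:
        hours = self.lifetime_years * HOURS_PER_YEAR
        return self.power_kw * self.energy_usd_per_kwh * hours

    def usd_per_useful_hour(self) -> float:
        hours = self.lifetime_years * HOURS_PER_YEAR
        return (self.capex_usd + self.energy_cost_usd()) / (hours * self.utilization)

ground = Deployment(40_000, 5, 1.0, 0.10, 0.8)          # assumed node on the grid
orbit = Deployment(40_000 + 60_000, 5, 1.0, 0.0, 0.8)   # assumed +60k launch/insurance

# Orbit breaks even only if its extra capex is no larger than the energy bill it avoids.
breakeven_overhead_usd = ground.energy_cost_usd()

print(f"ground: ${ground.usd_per_useful_hour():.2f} per useful hour")
print(f"orbit:  ${orbit.usd_per_useful_hour():.2f} per useful hour")
print(f"break-even launch/insurance overhead: ${breakeven_overhead_usd:,.0f}")
```

With these made-up inputs the orbital node is more expensive, which illustrates the point of the risk item: the case depends on launch, insurance, and replacement costs falling far enough relative to terrestrial energy and siting costs.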

What Builders Should Notice

- Build where the physics help you. Space offers power and data advantages for specific AI jobs.

- Move the compute to the data. It’s cheaper than moving the data to the compute.

- Fine-tuning beats pretraining in constrained environments. Ship targeted updates, not monoliths.

- Modularity compounds. Design for replacement, repair, and incremental scaling.

- Regulation is a feature. Compliance creates durable moats in dual-use markets.

Buildloop reflection

“Moats form where constraints flip. Space turns energy, data, and distance into advantages.”

Sources

- CNBC — Nvidia-backed Starcloud trains first AI model in space …

- Scientific American — Data Centers in Space Aren’t as Wild as They Sound

- Reddit — Nvidia backed Starcloud successfully trains first AI in space …

- Medium — The First NVIDIA H100 in Space: Why Starcloud Just …

- Interesting Engineering — US firm’s space-based data centers, power grid to …

- GitHub Pages — Why we should train AI in space – White Paper

- PowerBank — PowerBank and Smartlink AI (“Orbit AI”) to Launch the First …

- Space.com — Startup announces ‘Galactic Brain’ project to put AI data …

- Cutter Consortium — On-Orbit Data Centers: Mapping the Leaders in Space- …