What Changed and Why It Matters

Silicon Valley is in open tension with AI safety advocates. Money, policy, and optics now shape the field as much as research.

“Nearly all funding for AI safety research comes from Silicon Valley companies racing to develop AI, as the voices of AI ‘doomers’ fade…”

That’s the new baseline: Big Tech controls the safety purse. Startups are also pushing back on regulation. Political spending is rising. The ideology around AI’s role in society is hardening.

“Silicon Valley’s attempts to fight back against safety-focused groups may be a sign that they’re working.”

Zoom out and a pattern appears. Investors want credible safety work without getting trapped in U.S. culture wars. That’s why founders from outside the Valley—especially the Balkans—are getting attention. They bring technical rigor, cost discipline, and fewer political labels.

Here’s the part most people miss: global founder geography is now a strategy for AI safety, not just a talent search.

The Actual Move

What’s actually happening across the ecosystem:

- Control of safety capital
  - Techmeme notes that nearly all AI safety research funding now comes from the same companies racing to ship foundation models.
  - This concentrates agenda-setting power with labs and their backers.

- Political escalation
  - TechBuzz AI reports Silicon Valley is pouring $100M into AI-focused PACs ahead of the midterms to shape a “light-touch” policy environment.
  - A16z’s public stance reflects a broader realignment toward pro-industry, pro-defense technology.

- Regulatory pushback
  - MediaNama reports 140 AI startups opposed California’s AI safety bill, arguing it could “undermine competition” and entrench incumbents.

- Movement dynamics
  - The New York Times describes a rationalist current that believes AI can deliver a better life, if we don’t destroy ourselves first.
  - TechCrunch and Yahoo report rising friction: safety groups face counter-campaigns and reputational challenges from Valley actors.

- Incentive shift
  - Economist Global highlights a tilt toward product over research: profits first, safety second.

- Founder geography as de-risking
  - A widely shared Medium essay contrasts Balkan frugality and execution with Valley narrative and speed. Investors value that cultural mix in an ambiguous, politicized moment.

“AI research takes a backseat to profits as Silicon Valley prioritizes products over safety, experts say…”

“They allege that certain groups are acting out of self-interest or under the influence of powerful figures. This has led to increased tension…”

“Palmer Luckey got fired from Meta for backing the wrong candidate—now he’s the hero saving American defense, and that shift tells you…”

The Why Behind the Move

The calculus from a builder–investor lens:

• Model

Safety now sits downstream of model commercialization. Funding follows shipping cadence. Outsider teams that can run independent evaluations and red-teaming and build governance tooling, without culture-war baggage, are attractive.

• Traction

Demand is real for safety infrastructure that shortens sales cycles: evals, incident response, policy-grade logs, model governance APIs. Balkan teams often bring pure engineering focus and low burn—good for landing early pilots.
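
One way to read “policy-grade logs” is as tamper-evident records that an auditor can re-verify independently. The following is a minimal Python sketch under that assumption: each entry stores the SHA-256 hash of the previous entry, so any later edit breaks the chain. The names `append_entry`, `verify_chain`, `GENESIS`, and the event fields are hypothetical and for illustration only; they are not taken from any product or source cited here.

```python
import hashlib
import json

# Placeholder "previous hash" for the first entry (an assumption of this sketch).
GENESIS = "0" * 64


def _digest(event, prev_hash):
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event (a JSON-serializable dict) and chain it to the prior entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify_chain(log):
    """Return True only if every entry still matches its recorded hash and link."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical usage: record two model-governance events, then re-verify.
audit_log = []
append_entry(audit_log, {"action": "eval_run", "model": "model-a", "result": "pass"})
append_entry(audit_log, {"action": "deploy_approved", "model": "model-a"})
print(verify_chain(audit_log))
```

A real deployment would also need signed entries, trusted timestamps, and durable storage. The sketch only shows why a chained log is easier to audit than a plain one.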

• Valuation / Funding

With labs and their allies writing checks, independence is a signaling problem. Backing globally diverse teams helps investors show pluralism while keeping optionality. Lean cost bases extend runway and reduce dilution pressure.

• Distribution

Safety sells through trust. Credible work with academics, regulators, and open benchmarks beats glossy decks. Founders outside SF can win on proof, not proximity, especially if they publish their methods and interoperate with existing lab pipelines.

• Partnerships & Ecosystem Fit

The fastest path to users: integrations with MLOps, eval harnesses, and cloud governance tools. Partnerships with labs on pre-deployment testing build distribution into the release process itself.

• Timing

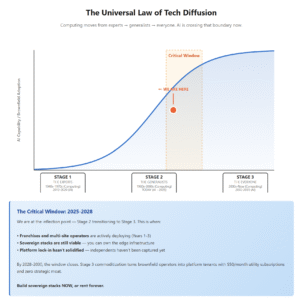

Elections, state bills, and corporate AI risk programs are colliding. Procurement is shifting from “AI pilots” to “AI controls.” Buyers want controls that are apolitical, auditable, and priced sanely.

• Competitive Dynamics

Incumbent labs bundle their own safety story. Independent teams win by being model-agnostic, regulator-friendly, and interoperable. Balkan founders’ cost rigor lets them undercut bundled offerings.

• Strategic Risks

- Perception of capture if funding is too close to labs

- Compliance complexity across jurisdictions

- Being outpaced by capability advances that make today’s controls obsolete

- Policy shocks (e.g., over-broad state bills) that raise barriers for startups

“140 Silicon Valley AI startups have written a letter criticizing the California AI safety bill, alleging it could undermine competition.”

What Builders Should Notice

- Trust is the moat. Publish methods, not manifestos. Benchmarks beat branding.

- Interop wins. Build for multi-model, multi-cloud, audit-first workflows.

- Cost is strategy. Low burn lets you outlast policy cycles and hype cycles.

- Go where buyers are. Security, risk, and compliance teams now own budget.

- Politics is a distribution channel—use it carefully or avoid it entirely.

Buildloop reflection

Clarity compounds when you ship proof over posture.

Sources

- TechCrunch — Silicon Valley spooks the AI safety advocates

- Startup Ecosystem — Silicon Valley’s Tensions with AI Safety Advocates

- The New York Times — The Rise of Silicon Valley’s Techno-Religion

- Techmeme — Nearly all funding for AI safety research comes from Silicon …

- Economist Global — AI research takes a backseat to profits as Silicon Valley prioritizes products over safety, experts say

- Yahoo News — Silicon Valley spooks the AI safety advocates

- MediaNama — Silicon Valley AI Startups Slam California AI Safety Bill

- YouTube — Ben Horowitz & Marc Andreessen: Why Silicon Valley Turned …

- Medium — Balkan mindset vs Silicon Valley practice | by Anita Kirkovska

- TechBuzz AI — Silicon Valley Pours $100M Into AI PACs for Midterm Influence